Hello,

I’d like to start a discussion about the technical problems we might run into when we switch to new front-end technologies, like web components or Vue.

I’m going to list the ones I thought of to begin the discussion, but I would like to hear as many opinions as possible about those and the ones I missed. I’ll probably start separate discussions later to cover each identified issue on its own.

When rendering pages server-side, the process is — roughly — the following:

- Gather data from various sources (e.g., databases, REST endpoints, etc.).

- Feed them to a template engine (i.e., Velocity in our case).

- Send the produced HTML to the client.

- The HTML is rendered in the browser.
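To make the server-side steps above concrete, here is a minimal sketch in TypeScript. It is only an illustration: `gatherData`, `renderTemplate`, and `handleRequest` are hypothetical names, the data source is stubbed, and a template literal stands in for Velocity.

```typescript
// Hypothetical page data; in practice it would come from databases, REST endpoints, etc.
interface PageData {
  title: string;
  body: string;
}

// Step 1: gather data (stubbed here, assumption for illustration).
function gatherData(pageName: string): PageData {
  return { title: pageName, body: "Content of " + pageName };
}

// Escape text before placing it inside HTML markup.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Step 2: feed the data to a template (a template literal stands in for Velocity).
function renderTemplate(data: PageData): string {
  const title = escapeHtml(data.title);
  const body = escapeHtml(data.body);
  return `<html><head><title>${title}</title></head>` +
    `<body><h1>${title}</h1><p>${body}</p></body></html>`;
}

// Step 3: the complete HTML string that would be sent to the client.
function handleRequest(pageName: string): string {
  return renderTemplate(gatherData(pageName));
}
```

The point of the sketch is that the browser receives the final markup in a single response and needs no JavaScript to display it.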

When rendering pages client-side, the process is as follows:

- Directly return a minimal HTML page along with JavaScript dependencies.

- The JavaScript is evaluated and loads 1) a front-end rendering framework (i.e., Vue), 2) the business logic, and 3) the dynamic content, usually from REST endpoints.

- The rendering framework takes the dynamic content and produces the HTML of the actual page.
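The client-side steps above can be sketched the same way. This is a simplified illustration, not how Vue works internally: `shellHtml`, `ContentLoader`, `stubLoader`, `renderContent`, and `bootstrap` are hypothetical names, the `/app.js` path is made up, and the render step returns a string instead of touching the DOM so that the sketch runs outside a browser.

```typescript
interface PageContent {
  title: string;
  body: string;
}

// The minimal HTML page returned by the server (the script path is hypothetical).
const shellHtml =
  '<html><body><div id="app"></div><script src="/app.js"></script></body></html>';

// Loads the dynamic content, usually from a REST endpoint.
type ContentLoader = (pageName: string) => Promise<PageContent>;

// Stand-in for a REST call (assumption for illustration).
const stubLoader: ContentLoader = async (pageName) => ({
  title: pageName,
  body: "Content of " + pageName,
});

function escapeMarkup(text: string): string {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Stand-in for the rendering framework: produces the markup of the actual page.
function renderContent(content: PageContent): string {
  return `<h1>${escapeMarkup(content.title)}</h1><p>${escapeMarkup(content.body)}</p>`;
}

// Runs in the browser once the JavaScript has been downloaded and evaluated.
async function bootstrap(pageName: string, load: ContentLoader): Promise<string> {
  const content = await load(pageName); // extra round trip before anything is shown
  return renderContent(content);
}
```

Calling `bootstrap("Main", stubLoader)` resolves to the page markup only after the content has been loaded, which is the extra delay compared to the server-side flow.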

The main advantage of client-side rendering is that it is easier and faster to update parts of the UI based on dynamic data than it is to reload the whole page.

But the initial process of loading the JavaScript dependencies and data can be slow and involves a JavaScript interpreter.

Initial page load performance

The first obvious limitation is the time to the first page load, especially when none of the resources are already in the browser cache.

Bad SEO

This is an indirect consequence of the initial page load performance. Web indexers can now interpret JavaScript, and based on what I could read, this seems to be reliable (see https://www.reddit.com/r/vuejs/comments/18m9tzc/google_seo_for_vue_spa_research/, https://www.reddit.com/r/TechSEO/comments/103u6jz/vuejs_and_seo_will_clientside_rendering_destroy/, and Vue.js SEO Tips | DigitalOcean).

However, I have already identified limitations in the context of Cristal (see Loading...).

Therefore, there is a risk, and we need to define best practices to avoid hurting the SEO abilities of XWiki.

Routes definition

So far, page URL resolution is done server-side. With client-side rendering, and the absence of a full page reload, URL resolution must be done client-side.
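As a rough illustration of what client-side URL resolution involves, here is a minimal route matcher in TypeScript. The route patterns and the names `Route`, `matchRoute`, and `resolveRoute` are hypothetical; they are not XWiki's actual URL scheme, and a real application would more likely rely on an existing router library.

```typescript
interface Route {
  name: string;
  pattern: string; // segments starting with ":" are parameters
}

interface RouteMatch {
  name: string;
  params: Record<string, string>;
}

// Compare one pattern against a path, segment by segment.
function matchRoute(route: Route, path: string): RouteMatch | null {
  const patternParts = route.pattern.split("/").filter((part) => part !== "");
  const pathParts = path.split("/").filter((part) => part !== "");
  if (patternParts.length !== pathParts.length) {
    return null;
  }
  const params: Record<string, string> = {};
  for (let i = 0; i < patternParts.length; i++) {
    const expected = patternParts[i] ?? "";
    const actual = pathParts[i] ?? "";
    if (expected.charAt(0) === ":") {
      params[expected.slice(1)] = actual; // capture the parameter value
    } else if (expected !== actual) {
      return null; // a literal segment does not match
    }
  }
  return { name: route.name, params };
}

// Return the first route that matches, or null when the URL is unknown.
function resolveRoute(routes: Route[], path: string): RouteMatch | null {
  for (const route of routes) {
    const match = matchRoute(route, path);
    if (match !== null) {
      return match;
    }
  }
  return null;
}

// Hypothetical routes, for illustration only.
const exampleRoutes: Route[] = [
  { name: "view", pattern: "/view/:space/:page" },
  { name: "edit", pattern: "/edit/:space/:page" },
];
```

With these example routes, `resolveRoute(exampleRoutes, "/view/Main/WebHome")` yields the `view` route with `space` set to `Main` and `page` set to `WebHome`, while an unknown path yields `null`.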

Accessibility

Similarly, some assistive technologies might expect the full HTML to be rendered straight away and cannot interpret JavaScript.

I’d be interested in @CharpentierLucas’s input about this.

Legacy support

Recent front-end frameworks can depend on new APIs that are not supported by older browsers.

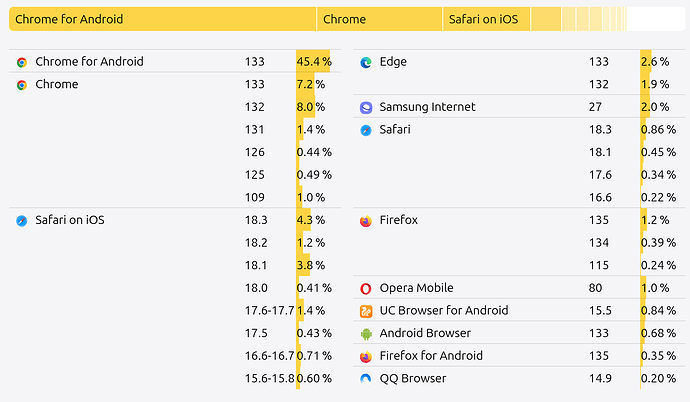

While our browser support rules (https://dev.xwiki.org/xwiki/bin/view/Community/SupportStrategy/BrowserSupportStrategy) are pretty permissive, as we support only the latest versions of Edge, Chrome, and Firefox, this leaves a lot of users behind once the browsers with a small user base are summed up. See Browserslist.
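One way to reason about this at runtime is feature detection. The sketch below is only an illustration: `findMissingApis` and `requiredApis` are hypothetical names, and the list of globals is an assumption chosen because custom elements are needed for web components and Vue 3's reactivity relies on `Proxy`.

```typescript
// Globals the front end would rely on (an illustrative, non-exhaustive list).
const requiredApis: string[] = ["customElements", "fetch", "Proxy"];

// Return the names that are not defined on the given global scope.
function findMissingApis(scope: Record<string, unknown>, names: string[]): string[] {
  return names.filter((name) => typeof scope[name] === "undefined");
}
```

In a browser, this could be called as `findMissingApis(globalThis as unknown as Record<string, unknown>, requiredApis)` to decide whether to show a fallback message instead of a blank page.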

WDYT? Thanks

Technical documentation: