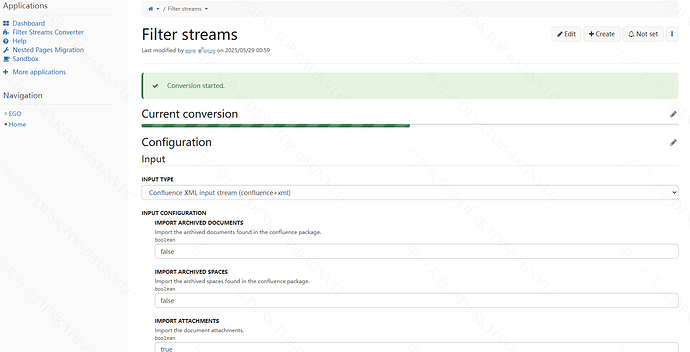

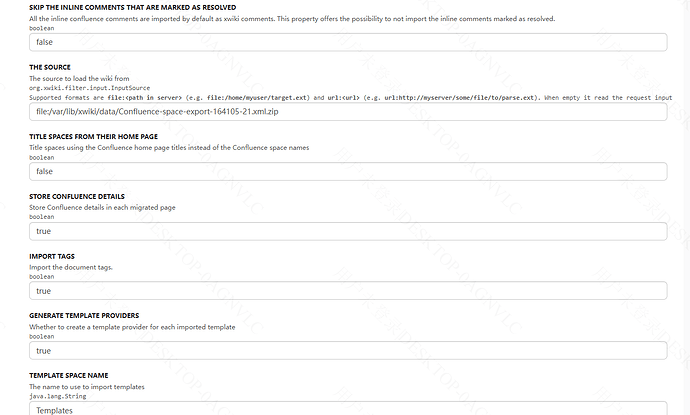

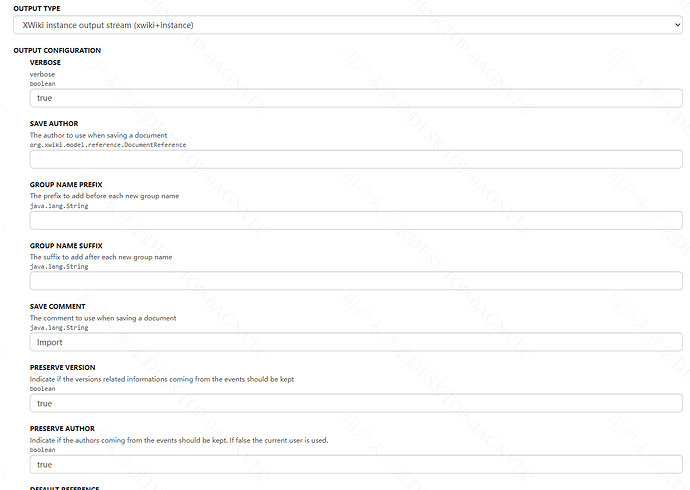

【Question】

We need to migrate data from Confluence to the XWiki platform. During testing, we exported a 20 MB ZIP package in XML format from Confluence, uploaded it to the XWiki server, and imported it through the web interface. The import succeeded, but the whole process took two hours. Monitoring the server during the import showed only one CPU core running at 100%; the import did not make use of the other cores. How can we speed this up?

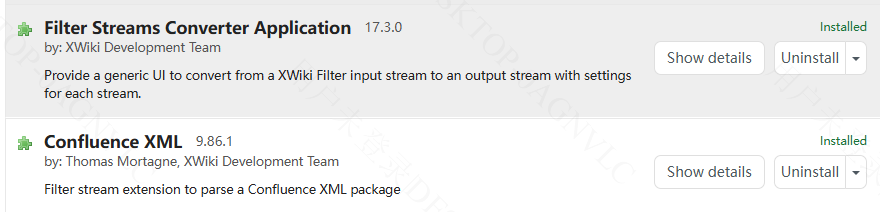

【Version】

XWiki 17.3.0

【Extension】

You should find the bottleneck of the import using a Java profiler and then either optimize that code or parallelize the parts that are slow. For example, if saving pages is the bottleneck, instead of saving pages directly in XWiki, you could add support for putting them in a queue and processing that queue with a thread pool. You just need to make sure that all revisions of a given page are still saved in order (for example, by the same thread), while different pages can be saved in parallel.

Currently, importing in XWiki is mostly optimized for simplicity and correctness rather than performance: an import is usually a one-time task, so high performance usually isn't crucial. Nevertheless, we would certainly consider code contributions that improve import performance.
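The queue-plus-thread-pool idea can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not XWiki code: `PageRevision`, `RevisionSaver`, and `StripedPageSaver` are hypothetical names, and it assumes the importer can hand each parsed revision to a callback. Each page is routed to one of several single-threaded executors by hashing its reference, so revisions of the same page are saved in submission order while different pages can proceed in parallel. A real integration would likely also need to set up XWiki's execution context and transaction handling in each worker thread.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

/** Hypothetical revision produced by the import parser (not an XWiki class). */
record PageRevision(String pageReference, int version, String content) {}

/** Hypothetical save callback; in a real import this would wrap the page-saving code. */
interface RevisionSaver {
    void save(PageRevision revision) throws Exception;
}

/** Routes all revisions of one page to the same single-threaded "lane". */
final class StripedPageSaver implements AutoCloseable {
    private final List<ExecutorService> lanes = new ArrayList<>();
    private final List<Throwable> failures = new CopyOnWriteArrayList<>();
    private final RevisionSaver saver;

    StripedPageSaver(int threads, RevisionSaver saver) {
        this.saver = saver;
        for (int i = 0; i < threads; i++) {
            lanes.add(Executors.newSingleThreadExecutor());
        }
    }

    void submit(PageRevision revision) {
        // floorMod keeps the lane index non-negative for negative hash codes.
        int lane = Math.floorMod(revision.pageReference().hashCode(), lanes.size());
        // A single-threaded executor runs tasks in FIFO order, preserving revision order.
        lanes.get(lane).execute(() -> {
            try {
                saver.save(revision);
            } catch (Exception e) {
                failures.add(e); // collect errors instead of silently losing them
            }
        });
    }

    List<Throwable> failures() {
        return failures;
    }

    @Override
    public void close() {
        lanes.forEach(ExecutorService::shutdown);
        try {
            for (ExecutorService lane : lanes) {
                lane.awaitTermination(1, TimeUnit.HOURS);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}

public class Main {
    public static void main(String[] args) {
        // Stand-in saver that records which versions were saved for each page.
        Map<String, List<Integer>> saved = new ConcurrentHashMap<>();
        RevisionSaver saver = r ->
            saved.computeIfAbsent(r.pageReference(), k -> new CopyOnWriteArrayList<>()).add(r.version());

        try (StripedPageSaver pool = new StripedPageSaver(4, saver)) {
            for (int v = 1; v <= 3; v++) {
                for (String page : List.of("Space.PageA", "Space.PageB", "Space.PageC")) {
                    pool.submit(new PageRevision(page, v, "content v" + v));
                }
            }
        } // close() waits for all queued saves to finish
        System.out.println(saved);
    }
}
```

Hashing pages onto a fixed number of lanes keeps the ordering guarantee without any per-page locking; two pages that hash to the same lane are simply saved sequentially, which costs some parallelism but never correctness. Whether this helps at all depends on what the profiler shows: if the time is spent in parsing or in a single database connection, parallel saving will not remove that bottleneck.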