I am trying to use an LLM so that users can ask XWiki questions and get responses based on the XWiki pages I add.

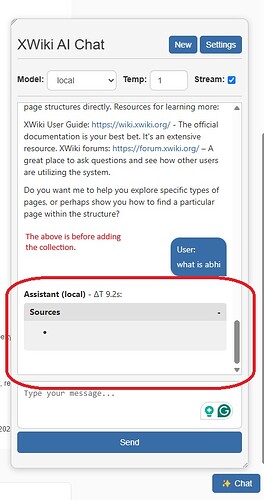

I set up a local model using the gemma3:1b LLM. The XWiki AI Chat works fine when no collection is added to the context.

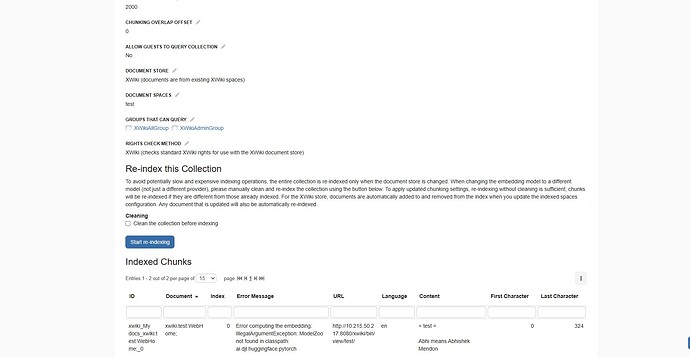

I added one wiki page (test) with one line of content to a collection (My docs). After this, when I ask the XWiki AI Chat a question, I only get an empty response.

Any help is appreciated. Thanks in advance.

Pako

3

Same here. Have you ever figured out how to fix this?

The LLM App is a great addition to XWiki and I would love to use it in production.

It works fine as long as no collection is added.

As soon as you add collections the response takes forever.

However, I noticed the Stop button disappearing while waiting for the response.

It seems like the connection is lost while waiting for an answer.

Any help would be appreciated.

Jubei

4

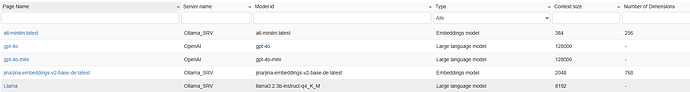

You need an embedding model for the collection.

Llama 3.2 with all-minilm or jina-embeddings-v2 is working for me.
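
If you want to confirm that the embedding model itself answers before wiring it into the collection, something like the sketch below might help. It assumes the models are served by a local Ollama instance on its default address (`http://localhost:11434`) and uses Ollama's `/api/embeddings` endpoint; the thread does not say which server is in use, so adjust accordingly. The function names are made up for illustration and are not part of XWiki or the LLM App.

```python
import json
import urllib.request

# Assumption: a local Ollama server on its default port.
OLLAMA_URL = "http://localhost:11434"


def build_embedding_request(model: str, text: str) -> urllib.request.Request:
    """Build a POST request asking the server to embed `text` with `model`."""
    payload = json.dumps({"model": model, "prompt": text}).encode("utf-8")
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/embeddings",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def embedding_length(response_body: bytes) -> int:
    """Return the number of dimensions in the returned vector (0 if missing)."""
    data = json.loads(response_body)
    return len(data.get("embedding", []))


def check_embedding_model(model: str = "all-minilm", timeout: float = 30.0) -> int:
    """Send one test sentence and report the size of the embedding that comes back."""
    request = build_embedding_request(model, "test")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return embedding_length(response.read())
```

Calling `check_embedding_model()` should return a positive number if the embedding model is pulled and reachable. An error or `0` would suggest the problem is on the model server side rather than in the collection configuration.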