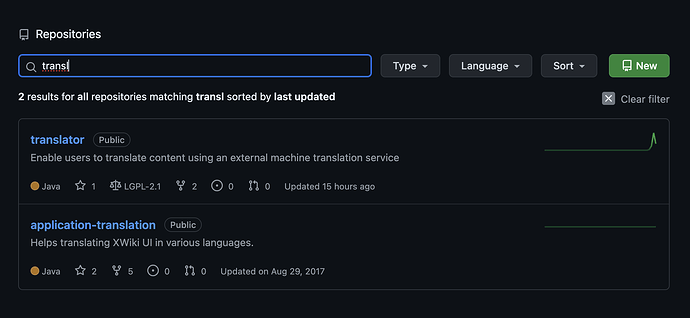

That sounds like a great addition to XWiki! I think “translator” sounds like a good project name.

Do you plan to offer translator integrations beyond DeepL? I’m thinking in particular about services like LibreTranslate, an open-source, self-hostable machine translation API.

How do you plan to preserve XWiki syntax during translation? I did some experiments with LibreTranslate, and it destroyed a lot of syntax: it randomly lost or duplicated syntax elements and translated parameter names like “width” and “height” (and even the `image:` prefix), giving results like `[[Bild:CollectionsOverview.png||alt="Collections Übersicht, zeigt zwei Kollektionen in einer Tabelle" Höhe="421" Breite="960"]` (the second `]` really was lost).

I just did a similar test with DeepL. It seems better, but it still replaces the quotes, leading to syntax like `[[image:CollectionsOverview.png||alt=„Collections overview, showing two collections in a table“ height=„421“ width=„960“]]`.

LibreTranslate has some support for HTML, but in one test it lost HTML comments, which would make it rather useless for our annotated HTML. It could still be an interesting option, though: if necessary, we could improve LibreTranslate ourselves, since it is open source. In any case, I have the impression that properly preserving formatting is a major challenge.
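Whatever service we use, it might help to at least detect this kind of damage automatically and send affected translations to human review. Here is a minimal Python sketch of such a check, assuming XWiki 2.1 syntax. It only compares a hypothetical, deliberately small set of delimiters (`[[`, `]]`, `{{`, `}}`, `||`), parameter names of the form `name="value"`, and the quote character after each `=`. The function name `check_translation` is made up for illustration, and `SOURCE` is my guess at the original markup; the other two strings are the outputs quoted above.

```python
import re
from collections import Counter

# Hypothetical, deliberately small set of XWiki 2.1 delimiters whose number
# of occurrences should be identical before and after translation.
DELIMITERS = ("[[", "]]", "{{", "}}", "||")

# Matches a parameter assignment such as height="421" and captures the name
# and the quote character after "=". \w is Unicode-aware in Python 3, so
# translated names like Höhe are captured as well.
PARAM_RE = re.compile(r'(\w+)=(["„“”])')


def check_translation(source: str, translated: str) -> list[str]:
    """Return human-readable syntax problems (an empty list if none were found)."""
    problems = []

    # 1. Lost or duplicated delimiters, e.g. a missing "]]".
    for delim in DELIMITERS:
        before, after = source.count(delim), translated.count(delim)
        if before != after:
            problems.append(f"{delim!r} count changed: {before} -> {after}")

    # 2. Translated parameter names, e.g. height -> Höhe.
    src_names = Counter(name for name, _ in PARAM_RE.findall(source))
    dst_names = Counter(name for name, _ in PARAM_RE.findall(translated))
    if src_names != dst_names:
        problems.append(f"parameter names changed: {sorted(src_names)} -> {sorted(dst_names)}")

    # 3. Typographic quotes around parameter values, e.g. height=„421“.
    for name, quote in PARAM_RE.findall(translated):
        if quote != '"':
            problems.append(f"parameter {name!r} uses non-ASCII quote {quote}")

    return problems


# Assumed original markup (a reconstruction) and the two outputs from above.
SOURCE = '[[image:CollectionsOverview.png||alt="Collections overview, showing two collections in a table" height="421" width="960"]]'
LIBRE = '[[Bild:CollectionsOverview.png||alt="Collections Übersicht, zeigt zwei Kollektionen in einer Tabelle" Höhe="421" Breite="960"]'
DEEPL = '[[image:CollectionsOverview.png||alt=„Collections overview, showing two collections in a table“ height=„421“ width=„960“]]'

if __name__ == "__main__":
    for label, translated in (("LibreTranslate", LIBRE), ("DeepL", DEEPL)):
        print(label, check_translation(SOURCE, translated))
```

Both outputs get flagged: the LibreTranslate one for the lost `]]` and the renamed parameters, the DeepL one for the three „…“ quotes. A check like this can't repair anything, and it misses some problems (it doesn't notice that `image:` became `Bild:`, or a `]]` that moved to the wrong place), so it would only be a safety net.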

I also tried translating with LLMs. They are much better at preserving XWiki syntax and at recognizing which parts could or should be translated, but the translation is sometimes less literal, and LLMs tend to invent content, in particular when the original contains, e.g., an unfinished sentence. Still, I had the impression that an LLM-based translation could be a great starting point for human review. It is also relatively straightforward to give an LLM additional instructions, e.g., about the style of the translation or how to translate certain terms.

That said, LLMs come with a heavy performance and computational cost, and the context limit is hard to deal with: even when the input limit is generous, many LLM providers cap the output at 4096 tokens, which makes longer pages much harder to translate. So while there are some nice advantages, building a robust LLM-based translation system that can be used in practice is far from trivial.
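One possible workaround for the output cap is to translate a page in chunks and to repeat the style and terminology instructions with every chunk. A rough sketch, assuming a 4096-token output cap, a crude estimate of about 4 characters per token (real tokenizers differ), and splitting only at blank lines; `split_into_chunks` and `build_prompt` are hypothetical helpers, not part of any LLM provider's API:

```python
# Assumptions: a 4096-token output cap and roughly 4 characters per token.
MAX_OUTPUT_TOKENS = 4096
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate; a real tokenizer would be more accurate."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def split_into_chunks(page: str, max_output_tokens: int = MAX_OUTPUT_TOKENS,
                      headroom: float = 0.5) -> list[str]:
    """Group blank-line-separated paragraphs into chunks that should fit the cap.

    Only a fraction (headroom) of the cap is used as the budget, because a
    translation can be longer than its source.
    """
    budget = int(max_output_tokens * headroom)
    chunks: list[str] = []
    current: list[str] = []
    current_tokens = 0
    for paragraph in page.split("\n\n"):
        tokens = estimate_tokens(paragraph)
        # Close the current chunk if this paragraph would push it over budget.
        if current and current_tokens + tokens > budget:
            chunks.append("\n\n".join(current))
            current, current_tokens = [], 0
        current.append(paragraph)
        current_tokens += tokens
    if current:
        chunks.append("\n\n".join(current))
    return chunks


def build_prompt(chunk: str, target_lang: str, glossary: dict[str, str], style: str) -> str:
    """Hypothetical prompt that carries style and terminology instructions per chunk."""
    terms = "\n".join(f"- {source} -> {target}" for source, target in glossary.items())
    return (
        f"Translate the following XWiki 2.1 content into {target_lang}.\n"
        "Keep all XWiki syntax, macro names and parameter names unchanged.\n"
        "Do not add content; leave unfinished sentences unfinished.\n"
        f"Style: {style}\n"
        f"Translate these terms as follows:\n{terms}\n\n"
        f"{chunk}"
    )
```

Each chunk would be sent as a separate request and the results joined again with blank lines. Splitting at blank lines is naive: it can cut through macros whose content contains blank lines (e.g., `{{code}}`), and a single very long paragraph still ends up alone in a chunk that may exceed the budget, so a real implementation would rather split along the parsed XWiki document structure.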

I also had some thoughts about using LLMs for updating translations, where I think they could be a big advantage. We could provide both the existing translation and the update (e.g., as a diff with some context) as input, and the LLM could then produce an updated translation that takes the existing one into account: it would only change what actually changed, and it would apply the existing style and the established translations of certain words to the updated parts.
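The input for such an update could be assembled with Python's standard `difflib` module. A minimal sketch, assuming line-based page content and a prompt wording I made up; `source_diff` and `build_update_prompt` are hypothetical names:

```python
import difflib


def source_diff(old_source: str, new_source: str, context: int = 3) -> str:
    """Unified diff of the original page with `context` unchanged lines per change."""
    lines = difflib.unified_diff(
        old_source.splitlines(),
        new_source.splitlines(),
        fromfile="source (old)",
        tofile="source (new)",
        n=context,
        lineterm="",  # splitlines() already removed the newlines
    )
    return "\n".join(lines)


def build_update_prompt(old_source: str, new_source: str, old_translation: str,
                        target_lang: str, context: int = 3) -> str:
    """Hypothetical prompt asking an LLM to patch an existing translation."""
    diff = source_diff(old_source, new_source, context)
    return (
        f"Below is an existing {target_lang} translation of an XWiki page, followed by "
        "a diff showing how the original page changed.\n"
        "Update the translation so that it reflects the diff. Change only the parts "
        "affected by the diff, keep all other text exactly as it is, and use the same "
        "style and terminology as the existing translation.\n\n"
        f"Existing translation:\n{old_translation}\n\n"
        f"Diff of the original:\n{diff}\n"
    )


if __name__ == "__main__":
    print(build_update_prompt("Intro\nOld sentence.\nOutro\n",
                              "Intro\nNew sentence.\nOutro\n",
                              "Einleitung\nAlter Satz.\nSchluss\n",
                              "German"))
```

The `context` parameter controls how many unchanged lines surround each change: more context helps the LLM find the corresponding passage in the existing translation, but costs more input tokens.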